



Contents

- Host

- Cluster

- VMs

- Windows Setup

- During Installation

- Firewall

- Ceph

Using

- Proxmox VE 6

Host

Installation

- Find a mouse.

- Just a keyboard is not enough.

- You don’t need the mouse too often, though, so you can hot-swap between the keyboard and mouse during the install.

- Download PVE and boot from the installation medium in UEFI mode (if supported).

- Storage:

- Use 1-2 mirrored SSDs with ZFS.

- (ZFS) enable compression and checksums and set the correct ashift for the SSD(s). If in doubt, use ashift=12.

- Localization:

- (Nothing special.)

- Administrator user:

- Set a root password. It should be different from your personal password.

- Set the email to “[email protected]” or something. It’s not important before actually setting up email.

- Network:

- (Nothing special.)

Initial Configuration

Follow the instructions for Debian server basic setup, but with the following exceptions and extra steps:

- Before installing updates, set up the PVE repos (assuming no subscription):

- Comment out all content from `/etc/apt/sources.list.d/pve-enterprise.list` to disable the enterprise repo.

- Create `/etc/apt/sources.list.d/pve-no-subscription.list` containing `deb http://download.proxmox.com/debian/pve buster pve-no-subscription` to enable the no-subscription repo.

- More info: Proxmox VE: Package Repositories

- Don’t install any of the firmware packages, as that will remove the PVE firmware packages.

- Update network config and hostname:

- Do NOT manually modify the configs for network, DNS, NTP, firewall, etc. as specified in the Debian guide.

- (Optional) Install `ifupdown2` to enable live network reloading. This does not work if using OVS interfaces.

- Update network config: Use the web GUI.

- (Optional) Update hostname: See the Debian guide. Note that the short and FQDN hostnames must resolve to the IPv4 and IPv6 management address to avoid breaking the GUI.

- Update MOTD:

- Disable the special PVE banner: `systemctl disable --now pvebanner.service`

- Clear or update `/etc/issue` and `/etc/motd`.

- (Optional) Set up dynamic MOTD: See the Debian guide.

- Set up firewall:

- Open an SSH session, as this will prevent full lock-out.

- Go to the datacenter firewall page.

- Enable the datacenter firewall.

- Add incoming rules on the management network for NDP (ipv6-icmp), ping (macro ping), SSH (tcp 22) and the web GUI (tcp 8006).

- Go to the host firewall page.

- Enable the host firewall (TODO disable and re-enable to make sure).

- Disable NDP on the nodes. (This is because of a vulnerability in Proxmox where it autoconfigures itself on all bridges.)

- Enable TCP flags filter to block illegal TCP flag combinations.

- Make sure ping, SSH and the web GUI are working for both IPv4 and IPv6.

- Set up storage:

- Create a ZFS pool or something.

- Add it to `/etc/pve/storage.cfg`: See Proxmox VE: Storage
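The repository steps above can be sketched as a small shell function. This is a minimal sketch, not an official procedure: the function name `setup_pve_repos` and its optional root-prefix argument are hypothetical, added so it can be tried against a scratch directory before touching a real host. The file paths and the `deb` line are the ones given in the list above.

```shell
#!/bin/sh
# Sketch: switch a PVE 6 (Debian buster) host to the no-subscription repo.
# The first argument is a hypothetical root prefix (default "/") so the
# function can be tested on a scratch directory first.
setup_pve_repos() {
    root="${1:-/}"
    dir="${root%/}/etc/apt/sources.list.d"
    ent="$dir/pve-enterprise.list"
    mkdir -p "$dir"
    # Comment out every line that is not already a comment.
    if [ -f "$ent" ]; then
        sed 's/^\([^#]\)/# \1/' "$ent" > "$ent.tmp" && mv "$ent.tmp" "$ent"
    fi
    # Enable the no-subscription repo (line taken from the notes above).
    echo 'deb http://download.proxmox.com/debian/pve buster pve-no-subscription' \
        > "$dir/pve-no-subscription.list"
}
```

On a real host this would be called as root without an argument and followed by `apt update`.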

Configure PCI(e) Passthrough

Possibly outdated

- Guide: Proxmox VE: Pci passthrough

- Requires support for IOMMU, IOMMU interrupt remapping, and for some PCI devices, UEFI support

- Only 4 devices are supported

- For graphics cards, additional steps are required

- Set up BIOS/UEFI features:

- Enable UEFI

- Enable VT-d and SR-IOV Global Enable

- Disable I/OAT

- Enable SR-IOV for NICs in BIOS/ROM

- Enable IOMMU: Add `intel_iommu=on` to the GRUB command line (edit `/etc/default/grub` and add it to the line `GRUB_CMDLINE_LINUX_DEFAULT`) and run `update-grub`.

- Enable modules: Add `vfio vfio_iommu_type1 vfio_pci vfio_virqfd pci_stub` (newline-separated) to `/etc/modules` and run `update-initramfs -u -k all`.

- Reboot.

- Test for IOMMU interrupt remapping: Run `dmesg | grep ecap` and check if the last character of the `ecap` value is 8, 9, a, b, c, d, e, or f. Also, run `dmesg | grep vfio` to check for errors. If it is not supported, set `options vfio_iommu_type1 allow_unsafe_interrupts=1` in a file under `/etc/modprobe.d/` (module options belong there rather than in `/etc/modules`). Note that this also makes the host vulnerable to interrupt injection attacks.

- Test NIC SR-IOV support: `lspci -s <NIC_BDF> -vvv | grep -i 'Single Root I/O Virtualization'`

- List PCI devices: `lspci`

- List PCI devices and their IOMMU groups: `find /sys/kernel/iommu_groups/ -type l`

- A device with all of its functions can be added by removing the function suffix of the path.

- Add PCIe device to VM:

- Add `machine: q35` to the config.

- Add `hostpci<n>: <pci-path>,pcie=1,driver=vfio` to the config for every device.

- Test if the VM can see the PCI card: Run `qm monitor <vm-id>`, then `info pci` inside.
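The interrupt remapping test above comes down to checking the last hex digit of the `ecap` value. A minimal sketch of that check follows. The function names `ecap_has_interrupt_remapping` and `report_ecap` are made up for illustration, and the parsing assumes the kernel log contains the value in the form `ecap <hex>`.

```shell
#!/bin/sh
# Succeeds if the last hex digit of the ecap value is 8..f,
# which is the check described in the notes above.
ecap_has_interrupt_remapping() {
    case "$1" in
        *[89abcdefABCDEF]) return 0 ;;
        *) return 1 ;;
    esac
}

# Reads kernel log lines on stdin and reports each ecap value found.
# Assumes the "ecap <hex>" format; adjust the pattern if your log differs.
report_ecap() {
    grep -o 'ecap [0-9a-fA-F]*' | while read -r label value; do
        if ecap_has_interrupt_remapping "$value"; then
            echo "$value: interrupt remapping supported"
        else
            echo "$value: interrupt remapping NOT supported"
        fi
    done
}
```

On a host, this would be used as `dmesg | report_ecap`.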

Troubleshooting

Failed login:

Make sure /etc/hosts contains both the IPv4 and IPv6 addresses for the management networks.

Cluster

Usage

- The cluster file system (`/etc/pve`) is synchronized across all nodes, meaning quorum rules apply to it.

- The storage configuration (`storage.cfg`) is shared by all cluster nodes, as part of `/etc/pve`. This means all nodes must have the same storage configuration.

- Show cluster status: `pvecm status`

- Show HA status: `ha-manager status`

Creating a Cluster

- Set up an internal and preferably isolated management network for the cluster.

- Create the cluster on one of the nodes: `pvecm create <name>`

Joining a Cluster

- Add each other host to each host’s hostfile using shortnames and internal management addresses.

- If firewalling NDP, make sure it’s allowed for the internal management network. This must be fixed BEFORE joining the cluster to avoid loss of quorum.

- Join the cluster on the other hosts: `pvecm add <name>` (where `<name>` is the hostname or address of a node that is already in the cluster)

- Check the status: `pvecm status`

- If a node with the same IP address has been part of the cluster before, run `pvecm updatecerts` to update its SSH fingerprint to prevent any SSH errors.

Leaving a Cluster

This is the recommended method to remove a node from a cluster. The removed node must never come back online and must be reinstalled.

- Back up the node to be removed.

- Log into another node in the cluster.

- Run `pvecm nodes` to find the ID or name of the node to remove.

- Power off the node to be removed.

- Run `pvecm nodes` again to check that the node disappeared. If not, wait and try again.

- Run `pvecm delnode <name>` to remove the node.

- Check `pvecm status` to make sure everything is okay.

- (Optional) Remove the node from the hostfiles of the other nodes.

High Availability Info

See: Proxmox: High Availability

- Requires a cluster of at least 3 nodes.

- Requires shared storage.

- Provides live migration.

- Configured using HA groups.

- The local resource manager (LRM/“pve-ha-lrm”) controls services running on the local node.

- The cluster resource manager (CRM/“pve-ha-crm”) communicates with the nodes’ LRMs and handles things like migrations and node fencing. There’s only one master CRM.

- Fencing:

- Fencing is required to prevent services from running on multiple nodes at once due to communication problems, which would cause corruption and other problems.

- Can be provided using watchdog timers (software or hardware), external power switches, network traffic isolation and more.

- Watchdogs: When a node loses quorum, it doesn’t reset the watchdog. When it expires (typically after 60 seconds), the node is killed and restarted.

- Hardware watchdogs must be explicitly configured.

- The software watchdog (using the Linux kernel driver “softdog”) is used by default and doesn’t require any configuration, but it’s not as reliable as other solutions as it’s running inside the host.

- Services are not migrated from failed nodes until fencing is finished.

Troubleshooting

Unable to modify because of lost quorum:

If you lost quorum because of connection problems and need to modify something (e.g. to fix the connection problems), run `pvecm expected 1` to set the expected quorum to 1.
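For context on why this helps: a cluster is quorate when more than half of the expected votes are present. The sketch below computes that majority, assuming the default of one vote per node. The function name `quorum_needed` is hypothetical.

```shell
#!/bin/sh
# Votes required for quorum: a strict majority of the expected votes.
# Assumes each node has exactly one vote (the default).
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

for nodes in 1 2 3 5; do
    echo "$nodes node(s): $(quorum_needed "$nodes") vote(s) needed"
done
```

With two nodes, both votes are needed, so losing either node loses quorum. With three nodes, one can fail. Lowering the expected votes to 1 reduces the requirement to a single vote, so one reachable node can modify `/etc/pve` again.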

VMs

Usage

- List: `qm list`

Initial Setup

- Generally:

- Use VirtIO if the guest OS supports it, since it provides a paravirtualized interface instead of an emulated physical interface.

- General tab:

- Use start/shutdown order if some VMs depend on other VMs (like virtualized routers). 0 is first, unspecified is last. Shutdown follows reverse order. For equal order, the VMID is used in ascending order.

- OS tab: No notes.

- System tab:

- Graphics card: Use the default. TODO SPICE graphics card?

- Qemu Agent: It provides more information about the guest and allows PVE to perform some actions more intelligently, but requires the guest to run the agent.

- BIOS: SeaBIOS (generally). Use OVMF (UEFI) if you need PCIe pass-through.

- Machine: Intel 440FX (generally). Use Q35 if you need PCIe pass-through.

- SCSI controller: VirtIO SCSI.

- Hard disk tab:

- Bus/device: Use SCSI with the VirtIO SCSI controller selected in the system tab (it supersedes the VirtIO Block controller).

- Cache:

- Use write-back for max performance with slightly reduced safety.

- Use none for balanced performance and safety with better write performance.

- Use write-through for balanced performance and safety with better read performance.

- Direct-sync and write-through can be fast for SAN/HW-RAID, but slow if using qcow2.

- Discard: When using thin-provisioning storage for the disk and a TRIM-enabled guest OS, this option will relay guest TRIM commands to the storage so it may shrink the disk image. The guest OS may require SSD emulation to be enabled.

- IO thread: If the VirtIO SCSI single controller is used (which uses one controller per disk), this will create one I/O thread for each controller for maximum performance.

- CPU tab:

- CPU type: Generally, use “kvm64”. For HA, use “kvm64” or similar (since the new host must support the same CPU flags). For maximum performance on one node or HA with same-CPU nodes, use “host”.

- NUMA: Enable for NUMA systems. Set the socket count equal to the number of NUMA nodes.

- CPU limit: Aka CPU quota. Floating-point number where 1.0 is equivalent to 100% of one CPU core.

- CPU units: Aka CPU shares/weight. Processing priority, higher is higher priority.

- See the documentation for the various CPU flags (especially the ones related to Meltdown/Spectre).

- Memory tab:

- Ballooning: Enable it. It allows the guest OS to release memory back to the host when the host is running low on it. For Linux, it uses the “balloon” kernel driver in the guest, which will swap out processes or start the OOM killer if needed. For Windows, it must be added manually and may incur a slowdown of the guest.

- Network tab:

- Model: Use VirtIO.

- Firewall: Enable if the guest does not provide one itself.

- Multiqueue: When using VirtIO, it can be set to the total CPU cores of the VM for increased performance. It will increase the CPU load, so only use it for VMs that need to handle a high number of connections.
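Most of the choices above can also be expressed on the command line. The following sketch only prints a `qm create` command instead of running it. The VM ID, name, sizes, the storage name `local-zfs` and the bridge `vmbr0` are placeholder assumptions, and `print_qm_create` is a hypothetical helper.

```shell
#!/bin/sh
# Prints (does not run) a qm create command reflecting the notes above:
# kvm64 CPU, VirtIO SCSI controller with a SCSI disk (discard + SSD
# emulation), VirtIO NIC with firewall, guest agent, and start order 1.
# Storage "local-zfs" and bridge "vmbr0" are assumptions; adjust to your host.
print_qm_create() {
    vmid="$1"
    name="$2"
    echo qm create "$vmid" \
        --name "$name" \
        --ostype l26 \
        --cpu kvm64 --cores 2 --memory 2048 \
        --scsihw virtio-scsi-pci \
        --scsi0 local-zfs:32,discard=on,ssd=1 \
        --net0 virtio,bridge=vmbr0,firewall=1 \
        --agent 1 \
        --startup order=1
}

print_qm_create 100 example-vm
```

Review the printed command and run it on the PVE host once the values match your environment.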


Windows Setup

For Windows 10.

Before Installation

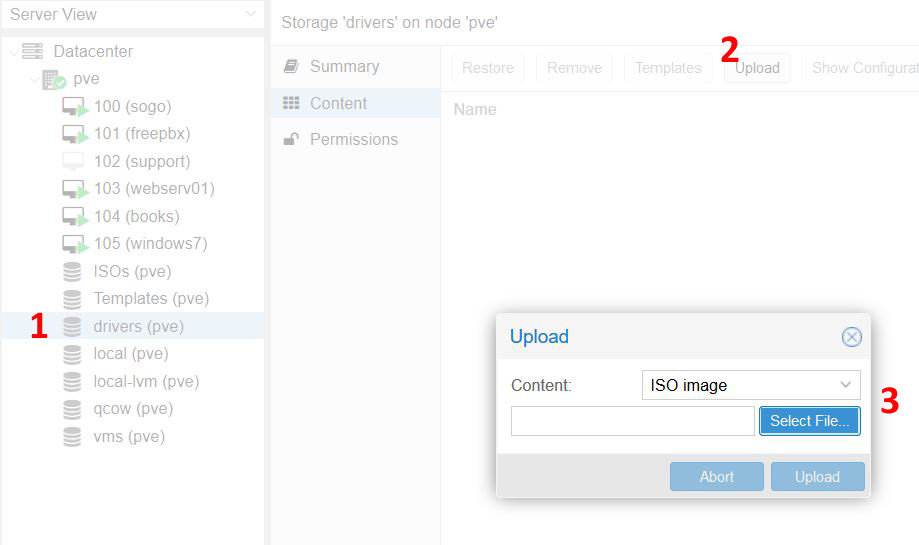

- Add the VirtIO drivers ISO: Fedora Docs: Creating Windows virtual machines using virtIO drivers

- Add it as a CDROM using IDE device 3.

During Installation

- (Optional) Select “I don’t have a product key” if you don’t have a product key.

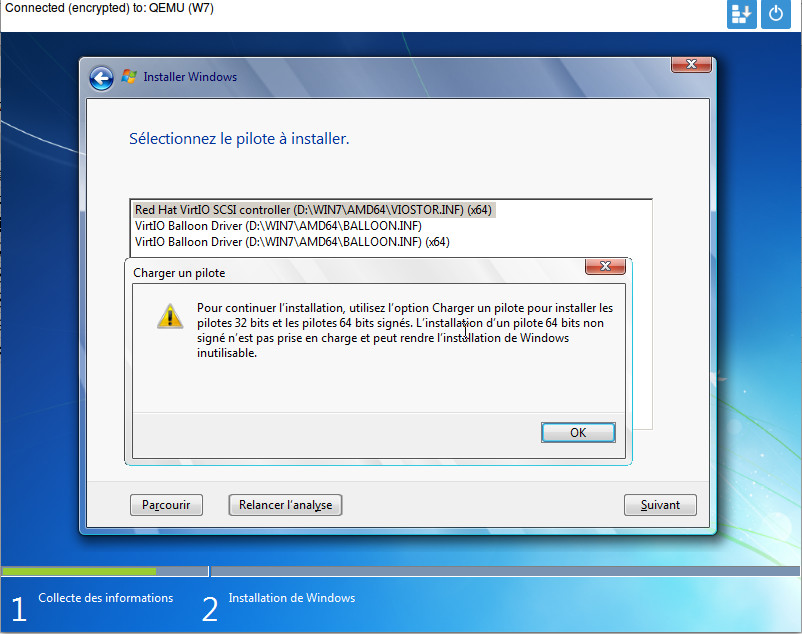

- In the advanced storage section:

- Install storage driver: Open drivers disc dir `vioscsi\w10\amd64` and install “Red Hat VirtIO SCSI pass-through controller”.

- Install network driver: Open drivers disc dir `NetKVM\w10\amd64` and install “Redhat VirtIO Ethernet Adapter”.

- Install memory ballooning driver: Open drivers disc dir `Balloon\w10\amd64` and install “VirtIO Balloon Driver”.

After Installation

- Install QEMU guest agent:

- Open the Device Manager and find “PCI Simple Communications Controller”.

- Click “Update driver” and select drivers disc dir `vioserial\w10\amd64`.

- Open drivers disc dir `guest-agent` and install `qemu-ga-x86_64.msi`.

- Install drivers and services:

- Download `virtio-win-gt-x64.msi` (see the wiki for the link).

- (Optional) Deselect “Qxl” and “Spice” if you don’t plan to use SPICE.

- Install SPICE guest agent:

- TODO Find out if this is included in `virtio-win-gt-x64.msi`.

- Download and install `spice-guest-tools` from spice-space.org.

- Set the display type in PVE to “SPICE”.

- For SPICE audio, add an `ich9-intel-hda` audio device.

- Restart the VM.

- Install missing drivers:

- Open the Device Manager and look for missing drivers.

- Click “Update driver”, “Browse my computer for driver software” and select the drivers disc with “Include subfolders” checked.

QEMU Guest Agent Setup

The QEMU guest agent provides more info about the VM to PVE, allows proper shutdown from PVE and allows PVE to freeze the guest file system when making backups.

- Activate the “QEMU Guest Agent” option for the VM in Proxmox and restart if it wasn’t already activated.

- Install the guest agent:

- Linux: `apt install qemu-guest-agent`

- Windows: See Windows Setup.

- Restart the VM from PVE (not from within the VM).

- Alternatively, shut it down from inside the VM and then start it from PVE.

SPICE Setup

SPICE allows interacting with graphical VM desktop environments, including support for keyboard, mouse, audio and video.

- Install a SPICE compatible viewer on your client:

- Linux: `virt-viewer`

- Install the guest agent:

- Linux: `spice-vdagent`

- Windows: See Windows Setup.

- In the VM hardware configuration, set the display to SPICE.

Troubleshooting

VM failed to start, possibly after migration:

Check the host system logs. It may for instance be due to hardware changes or storage that’s no longer available after migration.

Firewall

- PVE uses three different/overlapping firewalls:

- Cluster: Applies to all hosts/nodes in the cluster/datacenter.

- Host: Applies to all nodes/hosts and overrides the cluster rules.

- VM: Applies to VM (and CT) firewalls.

- To enable the firewall for nodes, both the cluster and host firewall options must be enabled.

- To enable the firewall for VMs, both the VM option and the option for individual interfaces must be enabled.

- The firewall is pretty pre-configured for most basic stuff, like connection tracking and management network access.

- Host NDP problem:

- For hosts, there is a vulnerability where the host autoconfigures itself for IPv6 on all bridges (see Bug 1251 - Security issue: IPv6 autoconfiguration on Bridge-Interfaces).

- Even though you firewall off management traffic to the host, the host may still use the “other” networks as default gateways, which will cause routing issues for IPv6.

- To partially fix this, disable NDP on all nodes and add a rule allowing protocol “ipv6-icmp” on trusted interfaces.

- To verify that it’s working, reboot and check its IPv6 routes and neighbors.

- Check firewall status: `pve-firewall status`

Special Aliases and IP Sets

- Alias `localnet` (cluster):

- For allowing cluster and management access (Corosync, API, SSH).

- Automatically detected and defined for the management network (one of them), but can be overridden at cluster level.

- Check: `pve-firewall localnet`

- IP set `cluster_network` (cluster):

- Consists of all cluster hosts.

- IP set `management` (cluster):

- For management access to hosts.

- Includes `cluster_network`.

- If you want to handle management firewalling elsewhere/differently, just ignore this and add appropriate rules directly.

- IP set `blacklist` (cluster):

- For blocking traffic to hosts and VMs.

PVE Ports

- TCP 22: SSH.

- TCP 3128: SPICE proxy.

- TCP 5900-5999: VNC web console.

- TCP 8006: Web interface.

- TCP 60000-60050: Live migration (internal).

- UDP 111: rpcbind (optional).

- UDP 5404-5405: Corosync (internal).

Ceph

See Storage: Ceph for general notes. The notes below are PVE-specific.

Notes

- It’s recommended to use a high-bandwidth SAN/management network within the cluster for Ceph traffic. It may be the same as used for out-of-band PVE cluster management traffic.

- When used with PVE, the configuration is stored in the cluster-synchronized PVE config dir.

Setup

- Set up a shared network.

- It should be high-bandwidth and isolated.

- It can be the same as used for PVE cluster management traffic.

- Install (all nodes): `pveceph install`

- Initialize (one node): `pveceph init --network <subnet>`

- Set up a monitor (all nodes): `pveceph createmon`

- Check the status: `ceph status`

- Requires at least one monitor.

- Add a disk (all nodes, all disks): `pveceph createosd <dev>`

- If the disk contains any partitions, run `ceph-disk zap <dev>` to clean the disk.

- Can also be done from the dashboard.

- Check the disks: `ceph osd tree`

- Create a pool (PVE dashboard).

- “Size” is the number of replicas.

- “Minimum size” is the minimum number of replicas that must be available for the pool to accept I/O. Writes are rejected while fewer replicas than this are available.

- Use at least size 3 and min. size 2 in production.

- “Add storage” adds the pool to PVE for disk image and container content.
